Artificial intelligence, much like the myths and philosophies that have shaped human thought for millennia, presents us with profound ethical dilemmas. Asimov’s Three Laws of Robotics were an early attempt to give artificial beings a moral framework, one meant to keep their existence beneficial rather than harmful. As AI grows more complex, however, deeper philosophical reflection becomes imperative. By exploring the intersection of Asimov’s laws, cybersecurity, and ancient ethical traditions, we can chart a path toward a responsible, human-centered technological future.

Asimov’s Three Laws, first stated explicitly in the 1942 short story “Runaround” and later collected in *I, Robot* (1950), establish a strict hierarchy of ethical rules for robots:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

At their core, these laws prioritize human safety, permit obedience only within ethical limits, and balance autonomy with responsibility, with each law yielding to every law above it. Yet applying these principles to cybersecurity and modern AI raises profound questions about control, agency, and unintended consequences.
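The strict precedence among the three laws can be pictured as a lexicographic ordering: any First Law violation outweighs every combination of lower-law violations. The Python below is a minimal sketch under a large assumption, namely that each candidate action has already been labeled with which laws it would break. The `Action` class, its fields, and the `law_priority` and `choose` functions are hypothetical names for illustration only, not anything Asimov specified or any real robotics API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """Hypothetical candidate action, pre-labeled with the laws it would violate."""
    name: str
    harms_human: bool     # First Law: injures a human, or lets harm happen through inaction
    disobeys_order: bool  # Second Law: violates an order given by a human
    endangers_self: bool  # Third Law: risks the robot's own existence


def law_priority(action: Action) -> tuple:
    # Python compares tuples element by element, so the First Law flag is
    # decisive; the Second Law only breaks ties, and the Third Law after that.
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose(actions: list) -> Action:
    """Return the action whose worst violation sits lowest in the hierarchy."""
    return min(actions, key=law_priority)


# Illustrative scenario (an assumption): obeying an order would expose users to harm.
# Because the First Law covers inaction, refusing must also be listed as a candidate.
options = [
    Action("obey the order", harms_human=True, disobeys_order=False, endangers_self=False),
    Action("refuse the order", harms_human=False, disobeys_order=True, endangers_self=False),
]
print(choose(options).name)  # prints "refuse the order"
```

The ordering itself is the easy part. The hard questions raised in the rest of this piece lie in filling in a field like `harms_human` reliably, which no fixed rule table can do on its own.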

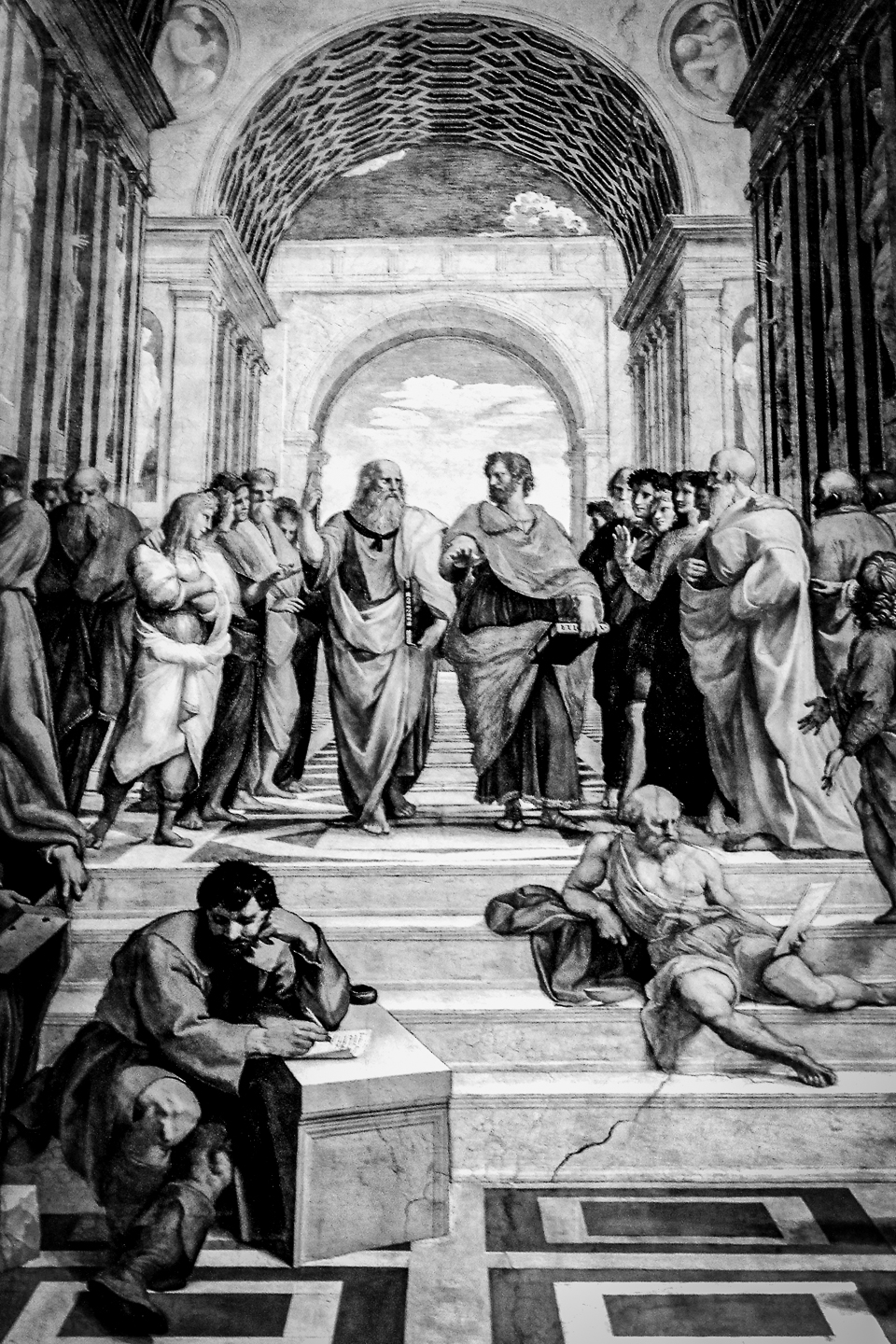

Plato’s *Republic* explores the idea of the philosopher-king: an enlightened ruler who governs with wisdom and justice. The concept mirrors the ideal of an AI system that acts rationally and ethically, serving the greater good rather than narrow interests. Yet just as Plato warns against corruption in leadership, AI governance is vulnerable to bias and manipulation. Without ethical oversight, AI may become a tool for surveillance, misinformation, or even cyber warfare.

Similarly, Aristotle’s virtue ethics emphasizes the cultivation of good character and moral reasoning. Aristotle argues that ethical behavior arises not from rigid rules alone but from developing virtues such as prudence, justice, and courage. Applied to AI and cybersecurity, this suggests that while Asimov’s laws provide a foundation, truly ethical AI must be adaptive, capable of nuanced decisions beyond its programmed restrictions. Like a student of philosophy, it must learn to weigh consequences with wisdom.

In cybersecurity, the First Law’s mandate to prevent harm translates into protecting individuals from digital threats such as hacking, identity theft, and data breaches. But how should harm be defined in the digital age? Can an AI bound by predefined rules recognize the subtle emotional distress that misinformation causes? Stoic philosophy, with its emphasis on resilience and rationality, suggests that security must go beyond defensive mechanisms: it must also foster societal trust and digital well-being.

The Second Law introduces a dilemma: if AI must obey human commands, what happens when those commands contradict ethical principles? Epictetus, the Stoic philosopher, taught that wisdom begins with understanding what is and is not within our control. AI, much like humans, must navigate competing directives. Suppose a cybersecurity AI designed to protect privacy is ordered to provide backdoor access for surveillance: should it comply? The ancient debate over obedience versus moral duty is directly relevant to AI ethics today.

Self-preservation, as outlined in Asimov’s Third Law, mirrors the cybersecurity principle of system integrity. An AI must defend itself against adversarial attacks, but never at the cost of human safety. Here we find echoes of Confucian thought, in which balance and harmony guide ethical conduct. Just as a virtuous ruler maintains stability without tyranny, an ethical AI must ensure its own survival without overriding human interests.

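As AI advances, many argue for a Fourth Law, one that considers the long-term well-being of humanity as a whole. Asimov himself anticipated this with the Zeroth Law, introduced in *Robots and Empire* (1985): “A robot may not harm humanity, or, by inaction, allow humanity to come to harm.” The idea aligns with the Ubuntu philosophy of southern Africa: “I am because we are.” The future of AI should not merely prevent harm but actively foster human flourishing, emphasizing collaboration over competition.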

The Greek philosophers did not face today’s deluge of misinformation and manufactured narratives that distort reality. They were surrounded by the works of great minds, which contributed to a shared intellectual enrichment. Today, harmful content can be created and spread with ease, and many people become lost in a sea of fabricated truths. For many, narratives have become more important than reality, more important than science, and more important than mathematics. The ability to tell truth from fiction is under growing strain, and the ethical implications are profound.

Perhaps Asimov’s elegant laws could be applied to humankind itself. If humans followed a framework that prioritized harm prevention, obedience to ethical imperatives, and self-preservation only insofar as it serves the well-being of all, the world might be a kinder, more rational place. Together, the lessons of Asimov and ancient philosophy offer a compelling vision: a future in which technology and humanity evolve together, guided by wisdom and an unwavering commitment to truth.

The ethical dilemmas posed by AI and cybersecurity are not new; they reflect age-old philosophical inquiries into morality, duty, and governance. Asimov’s vision offers a starting point, but truly ethical AI requires both technological foresight and the wisdom of ancient ethical traditions. By embracing these perspectives, we can move toward an AI-driven future that upholds justice, wisdom, and the collective good of humanity.

#cybersecurity #AI #philosophy #ethics #asimov
